When a global retailer in the beauty industry needed a new and cost-effective data processing solution, they knew the search would require a high level of expertise. That is why they decided to seek advice from ABCloudz.

The company had a massive amount of data and utilized SAS software for data processing, both powerful but extremely high-priced solution. To add to the problem, qualified specialists with SAS experience are difficult to find, adding time and expense to any required updates. The critical nature of this shortage was driven home when the company found themselves unable to utilize their SAS solution 100% of the time because they couldn’t find qualified specialists. It also occurred right as their current SAS licenses were set to expire.

With their company’s progress and growth on the line, they had to quickly decide on a plan. There were two possibilities:

- Either prolong their SAS licenses and find experts to support the existing system. Or,

- Deactivate the licenses and find an alternative solution.

After careful consideration, it was determined to pursue migration to a Big Data solution that was both easier to support and more cost effective. By engaging a partner – ABCloudz – that specialized in big data solutions and who were very well equipped to handle projects with tight project deadlines, they were able to implement a solution. ABCloudz team had both the expertise and capability to quickly deploy a solution to keep things running smoothly and reduce the service required to maintain.

The process

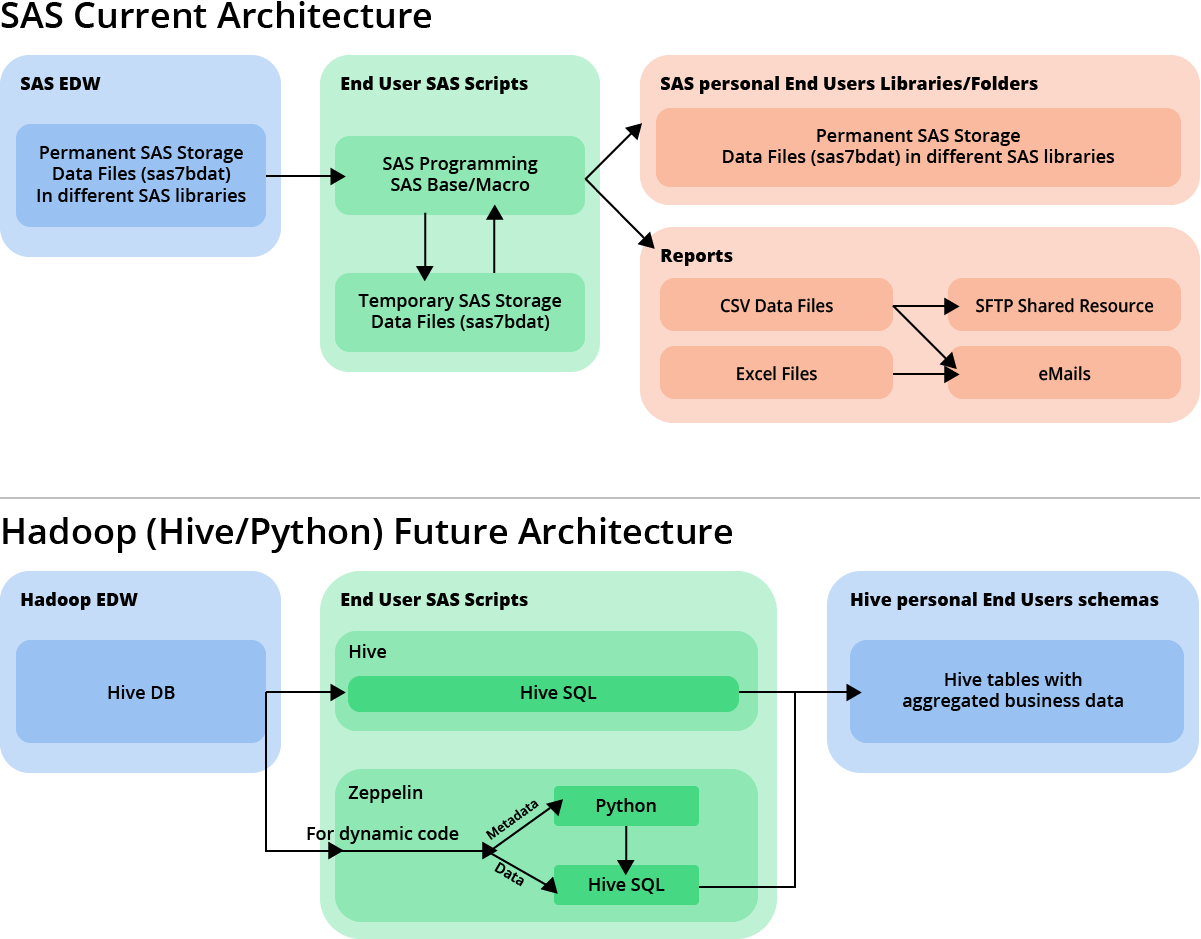

Once ABCloudz team got engaged with the project, they knew they had a very limited time. This was because SAS expertise was not readily available and because the license deadline was looming on the horizon. The team reviewed the project requirements and suggested a migration to Hadoop Big Data.

In addition to time constraints related to the license expiration, ABCloudz had to guarantee that the system at la minimum performed at the previous level. It was also vital to ensure that data quality did not degrade during the migration. And the SAS and the Hadoop Big Data solutions needed to run in parallel and the difference between the produced results was not to exceed 1%.

The beauty retailer was also adamant that the execution time must be less than the time to license expiration and should include enough time to test and validate the new solution. Due to a complexity of SAS, migrating from it to a different solution often results in performance degradation, a problem which ABCloudz team could not afford to encounter.

Obstacles

The customer provided 20 files of SAS code to ABCloudz team. These codes had to be run in a sequence each contained ETL code of different complexity. Another obstacle faced was that the files were all created by different people and contained heterogeneous data and called mostly to simple analytical functions.

After the initial analysis, ABCloudz team split those SAS files into 13 files of BI_DASH sequence and a dozen files containing other code. They also converted BI_DASH files to Python code. This is because in the Zeppelin environment Hive SQL code is produced to run in the Zeppelin SH interface. Finally, ABCloudz converted other SAS files to Hive SQL without any trouble as the SAS code used SAS Base statements with minimal SAS Macro statements.

Challenges

Every migration project has its challenges. In this case, there were dynamic scripts and considerable volumes of data to process. As the SAS and Hadoop systems operate on different logic formats, the ABCloudz team had to detect all the mismatches and provide solutions for them as well. Also, the data sources for SAS and Hadoop were different and that significantly influenced the process of comparison. And, the team had availability and configuration issues related to the stability of our customer’s initial solution build on SAS.

Processing NULL and missing values

The most common issue encountered was related to the different processing of SAS Missing values and Hive NULL values. For example, the following query in SAS returns all the rows and observations that are not equal to 0 including the missing values, while in Hive SQL it returns the rows that are not equal to 0 and not equal to NULL.

The most common issue we encountered was related to the different processing of SAS Missing values and Hive NULL values. For example, the following query in SAS returns all the rows and observations that are not equal to 0 including the missing values, while in Hive SQL it returns the rows that are not equal to 0 and not equal to NULL.

column1,

column2,

column3

FROM

table1

WHERE

column1 <> 0

Thus, the team had to modify the original query so that SAS and Hive systems produced the correspondent results.

column1,

column2,

column3

FROM

table1

WHERE

column1 <> 0

OR column1 IS NULL

Processing mechanics that can’t be directly converted to Hive SQL

SAS has a set of specifications not convertible to Hive SQL processing mechanics. From previous experience, the team knew that such cases referred to merge operators, conditional processing via SAS Base variables for intermediate calculations, “calculated” columns in “proc SQL”, etc. Because of these considerations, they knew that these cases require deep analysis and custom solutions.

For example, SAS SQL syntax allows for the following expressions, while Hive SQL does not allow for them.

col1 + col2 AS total1,

col3 + calculated total1 AS total2

col4 + calculated total1 AS total3

FROM

table1

Thus, for Hive SQL we had to expand this query and repeat all the calculations, as follows:

col1 + col2 AS total1,

col3 + col1 + col2 AS total2

col4 + col1 + col2 AS total3

FROM

table1

Limited transpose in SQL

The SAS system allows for transpose such as rewriting data from lines to columns while SQL doesn’t possess that functionality. The team also had several calls to “proc transpose” in the BI_DASH component that needed to be implemented. As SQL doesn’t allow for that, the team utilized functionality from the Pandas library. Thus, team members wrote Python code that transposed in SQL leveraging Pandas to retrieve additional metadata.

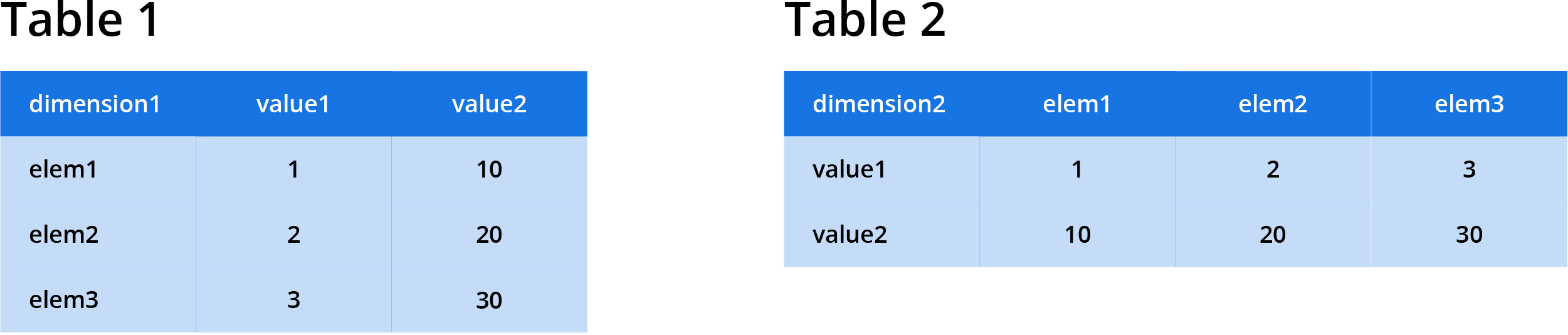

For example, to transpose Table 1 into Table 2 in SAS we use simple and flexible code:

id dimension1;

run;

It is crucial that the SAS code works properly even if we must extend the table or add more rows. For those cases the system produces a corresponding number of rows and columns. Hive SQL doesn’t provide for this functionality. So, to get the required results the team needed to use the following solution:

table2.dimension2,

SUM(table2.elem1) AS elem1,

SUM(table2.elem2) AS elem2,

SUM(table2.elem3) AS elem3

FROM

(

SELECT

'value1' AS dimension2,

CASE

WHEN dimension1 = 'elem1' THEN value1

ELSE NULL

END AS elem1,

CASE

WHEN dimension1 = 'elem2' THEN value1

ELSE NULL

END AS elem2,

CASE

WHEN dimension1 = 'elem2' THEN value1

ELSE NULL

END AS elem3

FROM

table1

UNION ALL

SELECT

'value2' AS dimension2,

CASE

WHEN dimension1 = 'elem1' THEN value2

ELSE NULL

END AS elem1,

CASE

WHEN dimension1 = 'elem2' THEN value2

ELSE NULL

END AS elem2,

CASE

WHEN dimension1 = 'elem2' THEN value2

ELSE NULL

END AS elem3

FROM

table1

) table2

GROUP BY

table2.dimension2

Hive SQL code is not as flexible as the SAS code. Thus, with the suggested solution, if we extend Table 1 the code just won’t process the newly added rows and columns. In addition, the Hive SQL code produces errors if columns are removed from the table. To address this, the team had to get creative and innovate an alternative way to provide for required options utilizing the Python code and Pandas library (transpose() method of Pandas DataFrame).

pandas_query = hc_query.toPandas()

pandas_query_transposed = pandas_query.transpose()

hive_context.sql("drop table if exists table2")

hc_query_target = hive_context.createDataFrame(pandas_query_transposed)

hc_query_target.registerTempTable("table2")

Customer’s benefits

The ABCloudz team completed the migration and smoothly switched the business users of the customer from SAS to Big Data. They strived to make that switch as painless as possible as the customer moved closer to the decommission deadline. Considering the amount of data the customers own, the migration required very meticulous work. In summary, in this project effort two facts definitively stand out:

Any system can be migrated

It’s possible to migrate any system even if it is unstable or under-configured. In this case, while ABCloudz team experienced difficulties in migrating a system where configuration didn’t allow for specific operations, the process was able to continue to a successful solution.

Equal performance

It’s almost impossible to create a Big Data solution that will work faster than a similar system created on SAS. Oftentimes, when migrating from SAS, system performance decreases up to 10 times compared to initial performance on SAS.

However, ABCloudz team managed to migrate the customer’s system from SAS to Hadoop SQL. The solution also required similar performance to the previous system with only a 1% difference. For a migration of this kind, results such as these are a tremendous undertaking but one that was done successfully. The result was a successful migration, completed on time, and with the performance level at or greater than the old SAS functionality well within the 1% variance. The customer didn’t lose their productivity and gained perfectly processed data and analytics without having any of the inconveniences caused by migration.