Custom AI Chatbot Development Using RAG

You have probably seen and heard about AI-powered chatbots that can converse with the user about website content, or some piece of knowledge related to a company. The world is full of buzz on all things AI, with chatbots driving the headlines.

In this article, we’ll share our experience building a full-blown solution from scratch that powers such chatbots. The goal is to build a framework that can be connected to any knowledge source and easily integrated into web or mobile applications. Such a framework can then be built into our corporate website, intranet portal, software products, or our end customers’ solutions.

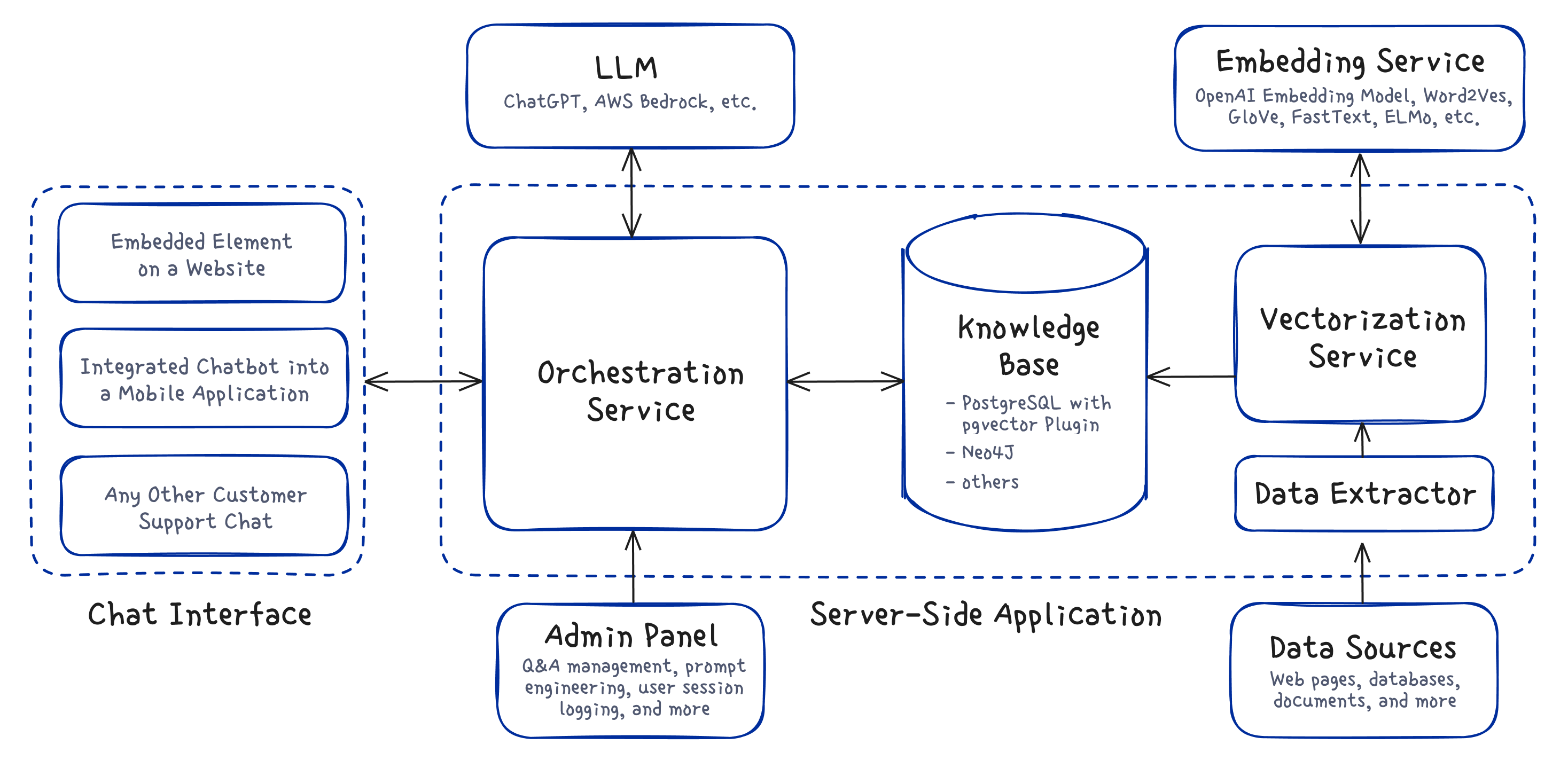

The chatbot is the visible user interface component that site visitors see, but it is just the tip of the iceberg. Underneath, there is a cloud-based infrastructure that includes web services, relational or graph databases, a vectorization component, Large Language Model (LLM) access, prompt management, and an administration panel that orchestrates all of it.

What is Retrieval-Augmented Generation (RAG)?

Our solution is based on the Retrieval-Augmented Generation framework, a state-of-the-art approach for improving the accuracy and reliability of LLMs. RAG lets you add extra, domain-specific context to the LLM prompt. This enriches prompts with a mix of context, historical data, and up-to-date knowledge beyond what the model learned during training, resulting in more accurate and relevant responses. The method works well with off-the-shelf models, without the need to deploy and fine-tune your own LLMs.

For example, consider a user asking an LLM about the latest stock market trends. Without RAG, the LLM’s response would be limited to its pre-trained knowledge, which may be outdated. With RAG, the LLM can access real-time data from a financial database, providing a more accurate and up-to-date response.
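As a minimal sketch of this idea, the prompt sent to the model can be assembled from retrieved passages plus the user’s question. The function name `build_rag_prompt` and the prompt wording are hypothetical, chosen for illustration only.

```python
def build_rag_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user's question into one prompt.

    Hypothetical helper: the wording of the instructions is an assumption.
    """
    # Number each passage so the model can refer back to its sources.
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_rag_prompt(
    "What are your office hours?",
    ["Our office is open 9:00-18:00 on weekdays."],
)
print(prompt)
```

The model never sees the whole knowledge base, only the few passages selected for this particular question.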

General Overview of the Solution

We initially built this solution for internal purposes, enabling our website visitors to quickly access up-to-date and relevant information about our services, products, and job openings through a built-in chat interface. In this scenario, the chatbot would tap into a unique knowledge base made up of all our website’s current content.

Whenever a visitor asks the chatbot a question, our Server-Side Application passes it to a language model, currently OpenAI’s GPT-4 Turbo. The model interprets the user’s question and then requests any needed information from the Server-Side Application to craft a complete answer. The Application retrieves the relevant context from the database and feeds it to the model. From there, the model either generates an answer or requests additional information for a more comprehensive response. Once it has enough details, the model formulates an answer, which we then deliver to the user via the chatbot interface.
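The request loop described above can be sketched roughly as follows. This is an illustration under stated assumptions, not our production code: `ModelReply`, `answer_question`, and the two stub functions are hypothetical names, and the stubs stand in for the real LLM call and knowledge base lookup.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ModelReply:
    """Either a final answer or a request for more context (hypothetical shape)."""
    answer: Optional[str] = None
    search_query: Optional[str] = None


def answer_question(
    question: str,
    ask_model: Callable[[str, list[str]], ModelReply],
    retrieve: Callable[[str], list[str]],
    max_rounds: int = 3,
) -> str:
    """Loop until the model answers or the round limit is reached."""
    context: list[str] = []
    for _ in range(max_rounds):
        reply = ask_model(question, context)
        if reply.answer is not None:
            return reply.answer
        # The model asked for more information: fetch it and try again.
        context.extend(retrieve(reply.search_query or question))
    return "Sorry, I could not find enough information to answer."


# Stubs standing in for the real LLM and knowledge base.
def fake_model(question: str, context: list[str]) -> ModelReply:
    if not context:
        return ModelReply(search_query="job openings")
    return ModelReply(answer=f"Based on our site: {context[0]}")


def fake_retrieve(query: str) -> list[str]:
    return ["We are hiring a backend engineer."]


result = answer_question("Are you hiring?", fake_model, fake_retrieve)
print(result)
```

The round limit is a design choice of this sketch: it keeps a model that keeps asking for more context from looping indefinitely.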

This diagram illustrates the logical architecture of our solution, which consists of loosely coupled modules. Think of each module as a container that communicates with the others through standardized APIs. This allows us to customize the internal workings of each module independently, selecting the most fitting technology for every client’s specific needs.

Let’s explore each module individually and discuss the readily available technology options for each.

Chat Interface

The chatbot interface supports three modes of integration:

Embedded Element on Website: This interactive chatbot widget, implemented with Node.js and React, can be integrated directly into a webpage, giving visitors immediate access to information and support without leaving the site.

Mobile Application: Integrating the chatbot into a mobile application involves native development with languages such as Swift for iOS and Kotlin for Android, or cross-platform frameworks such as React Native and Flutter. Features like push notifications for updates, voice input, and access to device capabilities can further enhance the user experience, providing convenient and personalized access to support and services within the app.

Any Other Customer Support Chat: This encompasses a variety of other customer support platforms, such as messaging apps, social networks, ticketing systems, etc., which can also be integrated with the chatbot to offer automated support.

Large Language Model (LLM) Module

The Large Language Model module serves as an engine for natural language understanding and response generation. It is engineered for easy swapping of language model providers, which gives flexibility in choosing the underlying technology. This abstraction layer allows the system to integrate with various LLMs, including OpenAI’s GPT models, Amazon Bedrock, Azure AI Language, and Google’s Gemini, among others, without significant modifications to the overall system architecture. Additionally, open-source alternatives such as Meta’s Llama 2, Salesforce’s XGen-7B, and the Falcon models are available and can be accessed through open-source frameworks such as LangChain, giving developers a broad range of options.
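One way to picture such an abstraction layer is a small interface that every provider adapter implements. The sketch below is illustrative only: `LLMProvider`, `generate`, and both stand-in providers are hypothetical names, and a real adapter would call the vendor’s API inside `complete`.

```python
from typing import Protocol


class LLMProvider(Protocol):
    """Minimal interface each provider adapter is assumed to implement."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in provider for local testing; it performs no network call."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseProvider:
    """Second stand-in, used to show that providers are interchangeable."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


# Registry keyed by a configuration value; swapping providers is a config change.
PROVIDERS: dict[str, LLMProvider] = {
    "echo": EchoProvider(),
    "upper": UppercaseProvider(),
}


def generate(provider_name: str, prompt: str) -> str:
    """Route the prompt to whichever provider the configuration names."""
    return PROVIDERS[provider_name].complete(prompt)


print(generate("echo", "hello"))
print(generate("upper", "hello"))
```

Because the rest of the system only calls `generate`, adding a provider means writing one adapter class and registering it.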

The LLM module deciphers what the user means, looking closely at language details and the question’s purpose. It considers the context of past exchanges provided with each query to keep the conversation on track. The module pulls facts from the Knowledge Base to make a fitting reply. It then forms a clear and relevant answer using its language skills.

Knowledge Bases for RAG-Based Solutions

In the context of leveraging external knowledge bases for RAG solutions, vector databases and graph databases stand out as two primary approaches.

Vector databases are designed for the efficient storage and swift retrieval of data represented as vectors, or embeddings. In this context, a vector is a numerical representation capturing the semantic essence of a piece of textual information. Searching through these vectors makes it possible to identify information that closely matches in meaning, which is crucial for finding answers to complex queries within large datasets. For instance, a vector database can rapidly locate items in a vast collection of disparate products that are closely aligned with a detailed search query, based on specific attributes such as brand, price, and category. The same principle supports personalized product recommendations, offering customers options similar to the items they are currently viewing.
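To make "closely matches in meaning" concrete, here is a small sketch of similarity search using cosine similarity over hand-made three-dimensional vectors. The catalog entries and their vectors are invented for illustration; real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], items: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the names of the k items most similar to the query vector."""
    ranked = sorted(items, key=lambda item: cosine_similarity(query, item[1]), reverse=True)
    return [name for name, _ in ranked[:k]]


# Toy catalog: the vectors are made up, not produced by a real model.
catalog = [
    ("espresso machine", [0.9, 0.1, 0.0]),
    ("coffee grinder", [0.8, 0.3, 0.1]),
    ("garden hose", [0.0, 0.2, 0.9]),
]
matches = top_k([1.0, 0.2, 0.0], catalog)
print(matches)
```

A vector database performs the same ranking, but with indexes that avoid comparing the query against every stored vector.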

In our solution, we use PostgreSQL with the pgvector extension as our vector database. PostgreSQL is a powerful, open-source object-relational database system, known for its proven architecture, reliability, data integrity, and comprehensive feature set. The pgvector extension adds a vector data type along with similarity search and ranking, which are vital for our information retrieval needs.
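For orientation, a pgvector-backed table and similarity query might look roughly like the sketch below. The table name `chunks`, its columns, and the three-dimensional vector are assumptions made for illustration, not our actual schema. The SQL is held in Python strings with psycopg-style named placeholders, so the snippet runs without a database; executing the statements requires a PostgreSQL driver and a server with pgvector installed.

```python
# Hypothetical schema: table and column names are illustrative assumptions.
CREATE_SCHEMA = """
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS chunks (
    id bigserial PRIMARY KEY,
    source_url text NOT NULL,
    content text NOT NULL,
    embedding vector(3)  -- dimension must match the embedding model in use
);
"""

# <=> is pgvector's cosine distance operator; a smaller distance means more similar.
SEARCH = """
SELECT content, embedding <=> %(query)s::vector AS distance
FROM chunks
ORDER BY embedding <=> %(query)s::vector
LIMIT %(limit)s;
"""


def to_vector_literal(values: list[float]) -> str:
    """Format a Python list in pgvector's text input form, e.g. '[0.1,0.2,0.3]'."""
    return "[" + ",".join(str(v) for v in values) + "]"


# Parameters to pass alongside SEARCH when executing it with a driver.
params = {"query": to_vector_literal([0.1, 0.2, 0.3]), "limit": 5}
print(params["query"])
```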

Neo4j, on the other hand, is a leader in the graph database technology space. Graph databases are optimized for handling highly connected data, enabling efficient representation and extraction of complex relationships between data points. This is especially beneficial in scenarios where context and the interconnections among knowledge elements are critical for understanding queries and formulating responses.

Moreover, we have also implemented vector search within graph databases, enriching data points with their vector representations. After identifying data points that are semantically similar through vector search, we can provide the LLM with deeper context by tracing the relationships between data based on specific characteristics. This combination of methods gives us flexible and powerful tools for handling large datasets where understanding the relationships between data points is crucial.

For example, in the home appliance retail sector, such a graph database, supplemented with vector search capabilities, can precisely find data by similarity with customer inquiries and then establish relationships between specific models of home appliances, user manuals, and solutions. By leveraging this approach, a specialized chatbot can offer precise instructions and solutions for appliance maintenance, providing users with efficient technical support services tailored to their specific needs. This is a real-world example of our solution in action.
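The two-step pattern (vector search first, relationship traversal second) can be illustrated with a tiny in-memory graph. This is a simplified stand-in for a graph database, not Neo4j code; the `Node` class, the appliance names, and the two-dimensional vectors are all invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A graph node; `related` holds outgoing relationships (hypothetical shape)."""
    name: str
    embedding: list[float] = field(default_factory=list)
    related: list["Node"] = field(default_factory=list)


def dot(a: list[float], b: list[float]) -> float:
    # With unit-length stored vectors, ranking by dot product matches cosine ranking.
    return sum(x * y for x, y in zip(a, b))


def retrieve_with_relations(query_vec: list[float], nodes: list[Node]) -> list[str]:
    # Step 1: vector search finds the node closest in meaning to the inquiry.
    best = max(nodes, key=lambda n: dot(query_vec, n.embedding))
    # Step 2: graph traversal collects the items linked to that node.
    return [best.name] + [r.name for r in best.related]


# Invented data: one issue is linked to an appliance model and a manual section.
model = Node("Model DW-100")
manual = Node("Manual: cleaning the drain filter")
issues = [
    Node("Dishwasher does not drain", [1.0, 0.0], [model, manual]),
    Node("Oven does not heat", [0.0, 1.0]),
]
context = retrieve_with_relations([0.9, 0.1], issues)
print(context)
```

The linked model and manual section would never be found by similarity alone, since their text may share little wording with the customer’s question; the relationships supply that context.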

The choice of knowledge base implementation depends on the specific needs of the business and the data being processed. If fast data retrieval through vector similarity is sufficient for your needs, PostgreSQL with pgvector is a good choice. However, if there’s a need to focus on identifying relationships within the data or a combination of both approaches, we recommend Neo4j. Keep in mind that we are not limited to these database solution vendors alone.

Now, let’s explore how our solution populates the knowledge base.

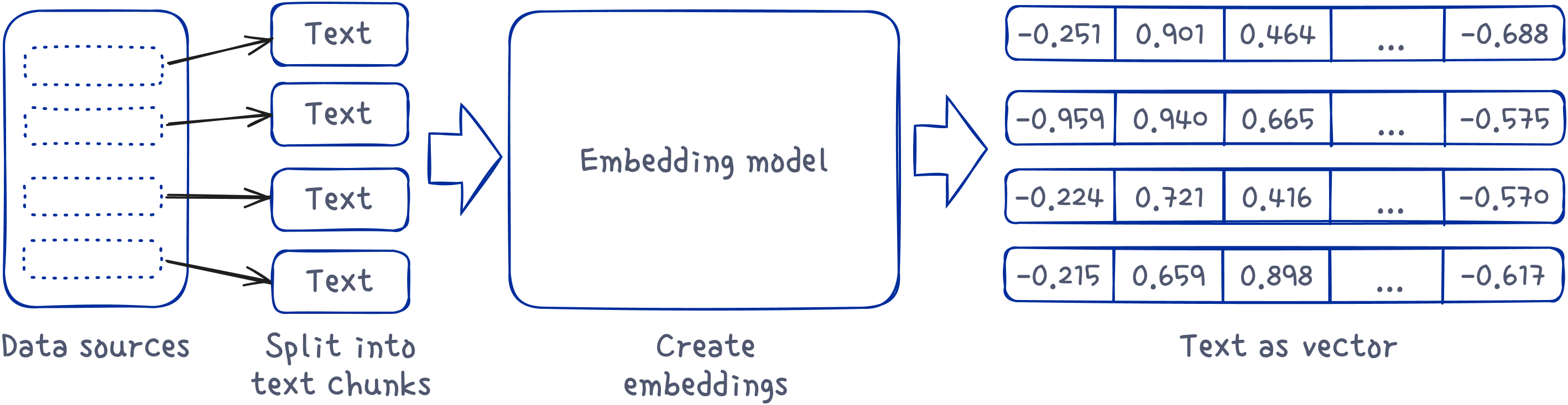

Data Extraction, Segmentation, and Vectorization

Our solution is designed to accommodate a wide range of knowledge sources, including websites, databases, text documents, PDF files, email archives, social media feeds, and many more. Whether your data is located in structured databases or in unstructured formats such as documents and web pages, our tools can extract, transform, vectorize, and load it into the knowledge base efficiently.

For instance, consider a company’s corporate website as the data source. Imagine we have previously extracted all the content from the site. Now, once a day or at another predetermined interval, the Data Extractor, a component of our Server-Side Application, analyzes the website content to identify any updates. It extracts text from each new or updated page on the site, segmenting it into paragraph-sized chunks. Subsequently, another part of our Server-Side Application, the Vectorization Service, interacts with an external embedding service by submitting these text chunks.
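The extraction step can be sketched as follows. Two assumptions are made here that the description above does not specify: updates are detected by comparing a SHA-256 hash of each page’s text against a stored hash, and paragraphs are delimited by blank lines. All function names are hypothetical.

```python
import hashlib


def split_into_chunks(page_text: str) -> list[str]:
    """Split page text into paragraph-sized chunks on blank lines (assumed delimiter)."""
    return [p.strip() for p in page_text.split("\n\n") if p.strip()]


def content_hash(page_text: str) -> str:
    """Fingerprint of the page text, used to notice changes between runs."""
    return hashlib.sha256(page_text.encode("utf-8")).hexdigest()


def find_changed_pages(pages: dict[str, str], known_hashes: dict[str, str]) -> list[str]:
    """Return URLs whose content is new or differs from the stored hash."""
    return [url for url, text in pages.items() if known_hashes.get(url) != content_hash(text)]


# Invented sample data: "/about" was seen on a previous run, "/jobs" is new.
pages = {
    "/about": "We build software.\n\nFounded in 2010.",
    "/jobs": "Open roles: QA engineer.",
}
known_hashes = {"/about": content_hash(pages["/about"])}

changed = find_changed_pages(pages, known_hashes)
chunks = split_into_chunks(pages["/about"])
print(changed, chunks)
```

Only the pages returned by `find_changed_pages` need to be re-chunked and re-vectorized, which keeps the scheduled run cheap when little has changed.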

We use OpenAI’s latest embedding model for content vectorization, though it can be replaced with any other embedding model or service, such as Word2Vec, GloVe, FastText, or ELMo. Each resulting vector captures the essence of the text. Finally, both the textual and vectorized representations of each chunk are stored in the knowledge base.
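A rough sketch of this step is shown below, with the embedding call hidden behind a plain function type so that the model can be swapped. `Embedder`, `toy_embedder`, and `vectorize_chunks` are hypothetical names, and the toy embedder is a placeholder that produces no semantic meaning; a real implementation would call an embedding API or model instead.

```python
from typing import Callable

# An embedder maps text to a vector; a real one would call an embedding service.
Embedder = Callable[[str], list[float]]


def toy_embedder(text: str) -> list[float]:
    """Placeholder only: [character count, word count] is not a semantic embedding."""
    return [float(len(text)), float(len(text.split()))]


def vectorize_chunks(chunks: list[str], embed: Embedder) -> list[dict]:
    """Pair every chunk with its vector, ready to be written to the knowledge base."""
    return [{"text": chunk, "embedding": embed(chunk)} for chunk in chunks]


records = vectorize_chunks(["We build software.", "Founded in 2010."], toy_embedder)
print(records)
```

Keeping the original text next to its vector matters: the vector is used to find the chunk, while the text is what gets passed to the LLM as context.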

Preparing data for a graph database does not differ significantly from preparing data for a vector database. Both processes involve breaking down pages into sections and further dividing those sections into chunks, with each chunk undergoing vectorization. The primary distinction lies in how the data is stored: in our vector database, chunks are stored as rows in tables, whereas a graph database stores them as nodes connected by relationships.

Admin Tools Overview

The administrative panel is the control center for system administrators to shape and monitor our chatbot solution. It’s a comprehensive suite of tools designed for detailed management, allowing for fine-tuning of user experiences and system functionality. The tools of the Admin Panel described below are showcased in this demo video:

Question & Answer: In this area, admins can tailor the chatbot’s database of Q&A pairs. This is crucial for queries that do not automatically retrieve answers from the existing data pool. By curating this content, admins ensure the chatbot can address common questions using the content from this area, without having to update the Knowledge Sources (like website pages). This section offers search and sorting capabilities, coupled with the ability to track user reactions, which helps keep the service responsive and user-focused.

Documents: In the “Documents” section, an administrator has access to data extracted from original sources of information. Each document represents a fundamental unit of data origin, such as a webpage, a document, a database record, and so on. This feature enables the administrator to verify whether the latest updates in the data have been captured, especially if the original data is frequently updated. Detailed information is provided for each document, including its content and a link to the original data source. Additionally, documents can be disabled or enabled as needed.

Session Chats: This feature is a window into the chatbot’s logs of user conversations, offering admins the chance to review complete dialogues. By examining how users respond to the chatbot’s answers, administrators gain valuable insights into user satisfaction and areas for improvement. Additionally, the exportable logs serve as a resource for in-depth support and testing, enhancing the chatbot’s performance and reliability.

Chat: This is a chat interface that enables the testing of a chatbot directly from the admin panel. The chatbot’s response can be thoroughly analyzed, thanks to the provision of detailed information for each answer. For instance, it shows which data sources were used to generate this response and even the code executed for this request.

Settings: Admins can customize the greeting message here, so that first encounters with the chatbot are as inviting and friendly as possible and set a positive tone right from the start.

Import and Export: The import and export features facilitate the convenient transfer of settings from one environment to another. This enables, for example, the easy application of settings tested on the staging version to the production version.

Template Questions: These are prompt options displayed at the very beginning of a new conversation with the chatbot, which the user can activate by selecting them. They also serve as hints, providing users with an understanding of the kinds of questions this chatbot is tailored to answer.

Overall, this administrative panel is a robust toolkit, empowering system administrators to customize and maintain the solution.
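As an illustration of the Import and Export tool described above, moving settings between environments can be as simple as serializing them to JSON and loading them elsewhere. The settings keys and function names below are assumptions made for the example, not the actual export format.

```python
import json


def export_settings(settings: dict) -> str:
    """Serialize settings to JSON; sorted keys keep exports easy to compare."""
    return json.dumps(settings, indent=2, sort_keys=True)


def import_settings(payload: str) -> dict:
    """Load settings previously produced by export_settings."""
    return json.loads(payload)


# Hypothetical settings tested on staging, then applied to production.
staging = {
    "greeting": "Hi! How can I help you today?",
    "template_questions": ["What services do you offer?", "Are you hiring?"],
}
production = import_settings(export_settings(staging))
print(production["greeting"])
```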

Cost Aspects and Deployment of LLM-Based Solution

You might naturally wonder about the cost of utilizing AI resources such as LLMs and vectorization services (creation of embeddings). We invite you to read our dedicated blog post on this topic.

Additionally, discover the unique deployment solution for our AI chatbot, which comes bundled with it.

Adding Chatbot to Your Knowledge Sources

The architecture of our AI chatbot is designed for rapid assembly and deployment. This efficiency stems from our method of customizing pre-developed modules instead of building them from scratch. Every component of our solution is adaptable to fit the unique scenarios of your business processes and the specifics of your data.

We welcome the opportunity to discuss your business challenges and explore how our solution can address them. Let’s unlock the potential of your data and leverage it for business growth. Together, we can tailor the perfect system setup to achieve your goals and navigate any operational challenges.

Contact us today to initiate the conversation.