Chatbots are nothing new under the sun. They have populated the web for a long time, leaving behind an impressive trail of user annoyance and frustration. Endless repetitions, question-and-answer loops, and downright stupidity were common complaints and the stuff of jokes among cubicle drones. The only improvements anyone seemed to expect were faster responses and a bigger Q&A database.

Recent breakthroughs in Artificial Intelligence (AI) have brought the benefits of this technology from cosmic labs down to earth. Generative AI models are now proliferating here, there, and everywhere, with ChatGPT as the major buzzword. While heavy lifters like OpenAI, Google, Microsoft, Amazon, and IBM compete to lead the race, smaller but nimble startups are promptly adopting the achievements of the AI giants to build and offer generative AI apps and services, including AI-generated content detectors, to the wider public.

Keep reading this blog post to learn about the technical details involved in creating the initial version of the AI chatbot. If your interest lies in the second, more sophisticated iteration of this solution, we encourage you to check out our three-part blog series detailing its functionality, architecture, deployment, and cost calculation.

From Chat to Talk – Unlocking the Power of Generative AI

Thanks to breakthroughs in Machine Learning and Deep Learning algorithms, AI software has learned to “understand” and respond to user requests.

Machine Learning is a subset of AI, and Deep Learning is, in turn, a subset of Machine Learning. Classical Machine Learning algorithms typically need human assistance along the way, such as hand-picked features or labeled data, to build their knowledge base.

Deep Learning goes further by stacking layers of artificial neurons into a structure known as a neural network. Deep neural networks can learn directly from unstructured data such as text, images, and audio, with far less human intervention in preparing that data.

Building an AI Bot – Thorns and Roses

The primary idea was to create an AI-powered customer service bot with a conversational user interface (CUI), capable of understanding user queries and generating relevant responses.

Building an app on a conversational AI platform was a new experience and a challenge for our development team. Before jumping headfirst into work, we sourced and studied dozens of resources on Natural Language Processing (NLP), Large Language Models (LLMs), and existing conversational AI platforms.

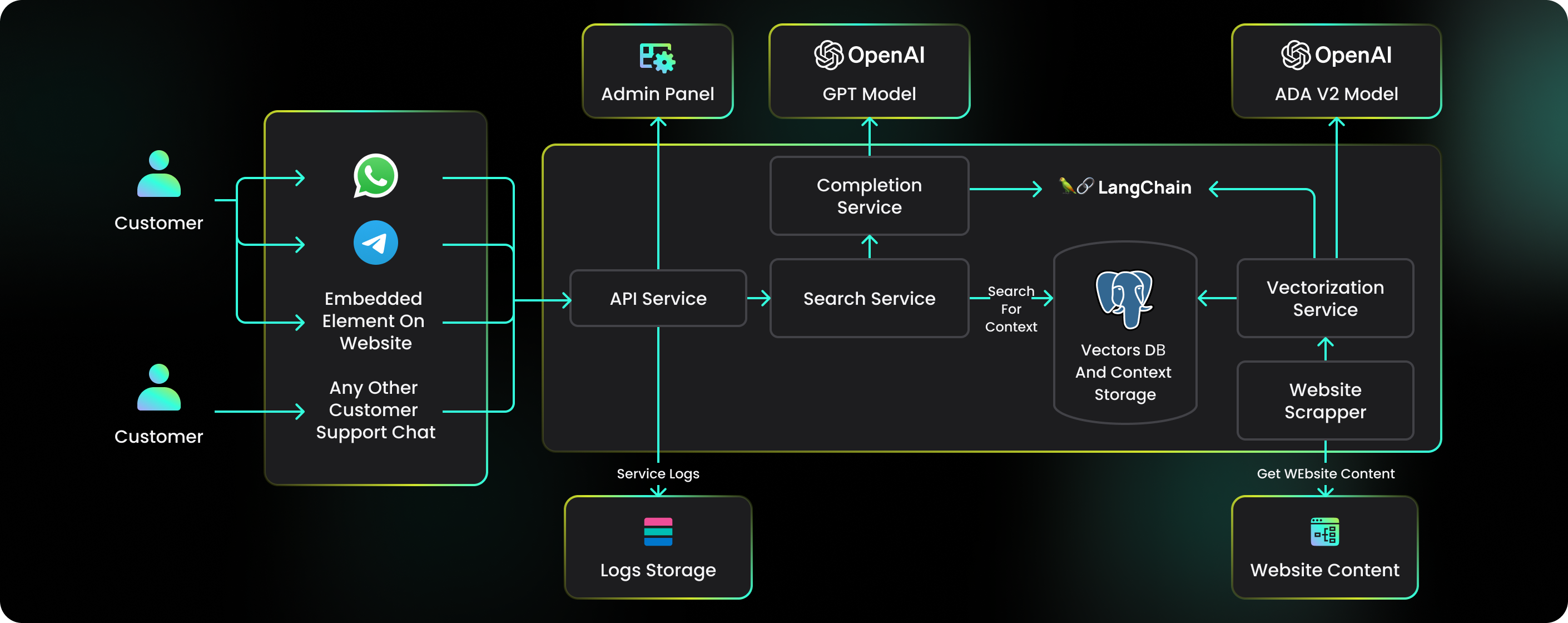

Having settled on a development strategy, our team opted for OpenAI LLMs and a modern tech stack: ReactJS on the front end; NestJS, a Node.js framework, on the back end; PostgreSQL with the PGVector extension and Redis for data storage; and the LangChain framework for orchestrating the LLM workflow. OpenAI’s gpt-3.5-turbo (ChatGPT 3.5 Turbo) and text-embedding-ada-002 (Ada v2) models form the foundation.

The software architecture was built around Retrieval Augmented Generation (RAG). RAG is an AI framework that lets an LLM retrieve relevant data from an external knowledge base, in this case indexed website content, on top of the knowledge it acquired during training. During a conversation, the RAG pipeline compares the embedding of the user query with the embeddings of documents stored in the knowledge base and passes the closest matches to the LLM as context.

What are Embeddings and Knowledge Vectors?

Embeddings are numerical representations of unstructured data, such as text, converted into sequences of numbers that make it easy for computers to understand the relationships between individual pieces of data (or concepts). The word “cat”, for instance, can be represented by a knowledge vector: a sequence of numbers such as [0.2, 0.5, 0.7 …]. The distance between two vectors indicates how related the items they represent are. Small distances suggest high relatedness, while large distances suggest low relatedness.
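To make “distance between vectors” concrete, here is a minimal sketch using cosine distance, a common choice for text embeddings. The 3-dimensional vectors are made-up toy values for illustration only; real text-embedding-ada-002 vectors have 1536 dimensions.

```python
import math
from typing import List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimensionality")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cosine_distance(a: List[float], b: List[float]) -> float:
    """1 - similarity: small values mean closely related items."""
    return 1.0 - cosine_similarity(a, b)


# Hypothetical toy embeddings.
cat = [0.2, 0.5, 0.7]
kitten = [0.25, 0.45, 0.72]
invoice = [0.9, -0.3, 0.1]

print(round(cosine_distance(cat, kitten), 3))   # small: related concepts
print(round(cosine_distance(cat, invoice), 3))  # large: unrelated concepts
```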

Embeddings are commonly used for:

- Search – search results are ranked by relevance to a query string

- Clustering – text strings are grouped by similarity

- Recommendations – items with related text strings are recommended

- Anomaly detection – outliers are identified

- Diversity measurement – similarity distributions are analysed

- Classification – text strings are classified by their most similar label
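Classification by “most similar label” can be sketched as a nearest-neighbor lookup over label embeddings. This is an illustrative sketch; the label names and vectors are hypothetical, and in practice each label embedding would come from the embedding model (for example, by embedding a short label description).

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def classify(text_embedding: List[float], label_embeddings: Dict[str, List[float]]) -> str:
    """Return the label whose embedding is most similar to the text embedding."""
    return max(
        label_embeddings,
        key=lambda label: cosine_similarity(text_embedding, label_embeddings[label]),
    )


# Hypothetical toy embeddings for three labels and one incoming message.
labels = {
    "billing": [0.9, 0.1, 0.0],
    "technical support": [0.1, 0.9, 0.2],
    "sales": [0.2, 0.1, 0.9],
}
message = [0.15, 0.8, 0.3]
print(classify(message, labels))  # → technical support
```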

The most common use case for embeddings is search, where results are ranked by relevance to a query string. Suppose you have a collection of documents or site articles, and you want to find the most relevant ones for a given query.

To do this, you can follow these steps:

- Get embeddings for all your documents and store them in a vector database.

- Get an embedding for your query string and send it to your vector database.

The documents with the smallest distances (i.e., the highest similarities) to your query embedding are your search results.
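The steps above can be sketched with a tiny in-memory store. This is a minimal sketch, not the production PostgreSQL + PGVector setup: the class and method names are hypothetical, and the embeddings are assumed to have been produced beforehand by an embedding model such as text-embedding-ada-002.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple


def _cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


@dataclass
class InMemoryVectorStore:
    """Toy stand-in for a vector database (hypothetical API)."""

    rows: List[Tuple[str, str, List[float]]] = field(default_factory=list)

    def add(self, doc_id: str, text: str, embedding: List[float]) -> None:
        # Step 1: store each document together with its embedding.
        self.rows.append((doc_id, text, embedding))

    def search(self, query_embedding: List[float], k: int = 3) -> List[Tuple[float, str, str]]:
        # Step 2: rank stored documents by cosine distance to the query embedding.
        scored = [
            (1.0 - _cosine_similarity(query_embedding, emb), doc_id, text)
            for doc_id, text, emb in self.rows
        ]
        scored.sort(key=lambda row: row[0])  # smallest distance = most relevant
        return scored[:k]


store = InMemoryVectorStore()
store.add("migration", "Database migration services", [1.0, 0.0, 0.0])
store.add("pricing", "How our pricing works", [0.0, 1.0, 0.0])
store.add("careers", "Open positions", [0.0, 0.0, 1.0])
for distance, doc_id, text in store.search([0.9, 0.2, 0.0], k=2):
    print(f"{doc_id}: {distance:.3f}")
```

A real vector database performs the same ranking, but typically uses an index instead of scanning every row.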

Workflow

The AI Chatbot workflow implements the following actions:

- Normalize the user query, using the chat history, with the LangChain Question Generator

- Find matching context in the embedding database

- Send the context-appended normalized user query to the OpenAI gpt-3.5-turbo model to get a response

The Question Generator is a component of the LangChain library. It generates a standalone question from the user query and the conversation context (chat history). The generated question is appended to the user query, and the resulting “normalized” query is then used to retrieve relevant documents from the database.

Finally, the retrieved documents and the normalized query are passed to the language model for it to generate a relevant response.
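The three-step workflow can be sketched as a small orchestration function. This is a hedged illustration, not the LangChain implementation: the LLM and the retriever are injected as plain callables (hypothetical stand-ins for the OpenAI chat model and the vector-database lookup), and the prompt wording is illustrative. In this sketch the standalone question is used directly as the retrieval query; appending it to the original query instead, as described above, would be a one-line change.

```python
from typing import Callable, List, Tuple

LLM = Callable[[str], str]              # prompt text in, completion text out
Retriever = Callable[[str], List[str]]  # query in, matching document texts out

CONDENSE_TEMPLATE = (
    "Given the following conversation and a follow-up question, "
    "rephrase the follow-up question to be a standalone question.\n\n"
    "Chat history:\n{history}\n"
    "Follow-up question: {question}\n"
    "Standalone question:"
)

ANSWER_TEMPLATE = (
    "Use the following context to answer the question.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def answer(question: str, history: List[Tuple[str, str]], llm: LLM, retrieve: Retriever) -> str:
    # 1. Normalize: turn a follow-up into a standalone question using the chat history.
    if history:
        history_text = "\n".join(f"{speaker}: {text}" for speaker, text in history)
        standalone = llm(CONDENSE_TEMPLATE.format(history=history_text, question=question)).strip()
    else:
        standalone = question
    # 2. Retrieve: find matching context in the embedding database.
    documents = retrieve(standalone)
    # 3. Generate: send the context-appended query to the chat model.
    prompt = ANSWER_TEMPLATE.format(context="\n---\n".join(documents), question=standalone)
    return llm(prompt)
```

Injecting the model and retriever as functions keeps the flow testable without network calls.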

Embedding Model

Meet text-embedding-ada-002

The text-embedding-ada-002 embedding model was, at the time of development, OpenAI’s newest achievement in embedding technology. It replaces five earlier embedding models, outperforming them at most tasks while combining their capabilities in a single model.

The Advantages of text-embedding-ada-002

- Higher performance. The model outperforms all previously released OpenAI embedding models in text search, code search, and sentence similarity tasks, and performs comparably in text classification.

- Joint capabilities. The model simplifies the interface of the embeddings endpoint by merging five separate models into one.

- Longer context. The context length is four times longer, increased from 2048 to 8192 tokens, which makes it more convenient to work with long documents.

- Reduced embedding size. The produced embeddings have 1536 dimensions, one-eighth the size of those produced by OpenAI’s most powerful earlier embedding model, Davinci, which makes vector databases more cost-efficient to operate.

- Lower price. The price of text-embedding-ada-002 is over 90% lower than that of older models of the same size.
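The storage saving can be checked with a quick calculation. text-embedding-ada-002 returns 1536-dimensional vectors, while the first-generation Davinci similarity model returned 12288-dimensional ones, eight times as many. The per-vector size below assumes pgvector’s layout of 4 bytes per dimension plus an 8-byte header; the chunk count is a hypothetical figure, and row overhead and indexes are ignored.

```python
# Assumption: pgvector stores each dimension as a 4-byte float plus an 8-byte header per vector.
BYTES_PER_DIM = 4
HEADER_BYTES = 8

ADA_002_DIMS = 1536   # text-embedding-ada-002
DAVINCI_DIMS = 12288  # first-generation Davinci similarity embeddings


def vector_bytes(dimensions: int) -> int:
    """Approximate on-disk size of one stored vector."""
    return BYTES_PER_DIM * dimensions + HEADER_BYTES


chunks = 100_000  # hypothetical number of indexed text pieces
ada_mb = chunks * vector_bytes(ADA_002_DIMS) / 1_000_000
davinci_mb = chunks * vector_bytes(DAVINCI_DIMS) / 1_000_000
print(f"ada-002: {ada_mb:.1f} MB, Davinci: {davinci_mb:.1f} MB")
# prints: ada-002: 615.2 MB, Davinci: 4916.0 MB
```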

Overall, text-embedding-ada-002 is a powerful tool for natural language processing and code tasks, enabling more capable applications in these fields.

Database

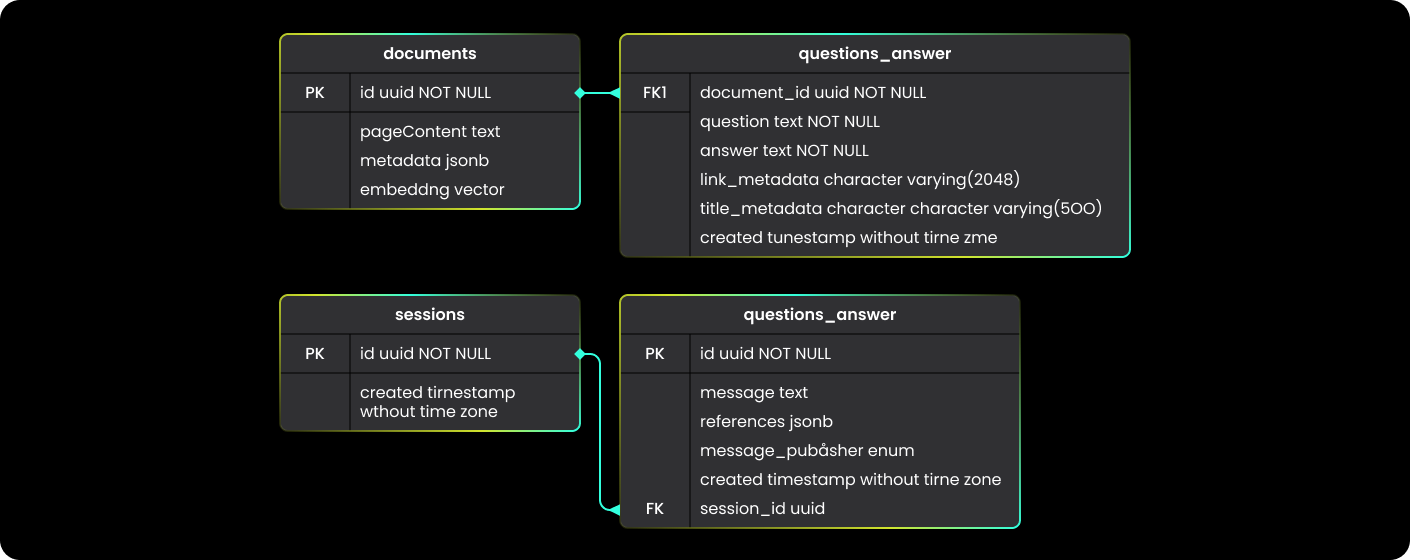

The AI Chatbot architecture integrates PostgreSQL with the PGVector extension and Redis for data storage, along with the LangChain framework. The database table schema includes the following tables:

- documents: the main table where context vectors (embeddings) are stored

- question_answer: additional context storage, see below

- sessions: the table that stores chat sessions, each identified by its primary key

- message: chat messages storage table
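For the documents table, a PGVector setup might look roughly like the following. This is a hedged sketch: the post does not list column names, so `id`, `content`, `metadata`, and `embedding` are hypothetical. It relies on pgvector’s `vector(n)` column type and its `<=>` cosine-distance operator, and the `%s` placeholders assume a Python driver such as psycopg.

```python
from typing import Iterable

# Hypothetical schema for the documents table; column names are illustrative.
CREATE_DOCUMENTS = """
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS documents (
    id        bigserial PRIMARY KEY,
    content   text NOT NULL,
    metadata  jsonb,
    embedding vector(1536)  -- text-embedding-ada-002 output size
);
"""

# `<=>` is pgvector's cosine-distance operator: smaller means more similar.
NEAREST_DOCUMENTS = """
SELECT id, content, embedding <=> %s::vector AS distance
FROM documents
ORDER BY distance
LIMIT %s;
"""


def to_pgvector_literal(embedding: Iterable[float]) -> str:
    """Format numbers as pgvector's text input, e.g. '[0.1,0.2,0.3]'."""
    return "[" + ",".join(str(float(x)) for x in embedding) + "]"
```

With psycopg, a lookup could then be issued as `cursor.execute(NEAREST_DOCUMENTS, (to_pgvector_literal(query_embedding), 5))`.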

Creating Embeddings from Website Data

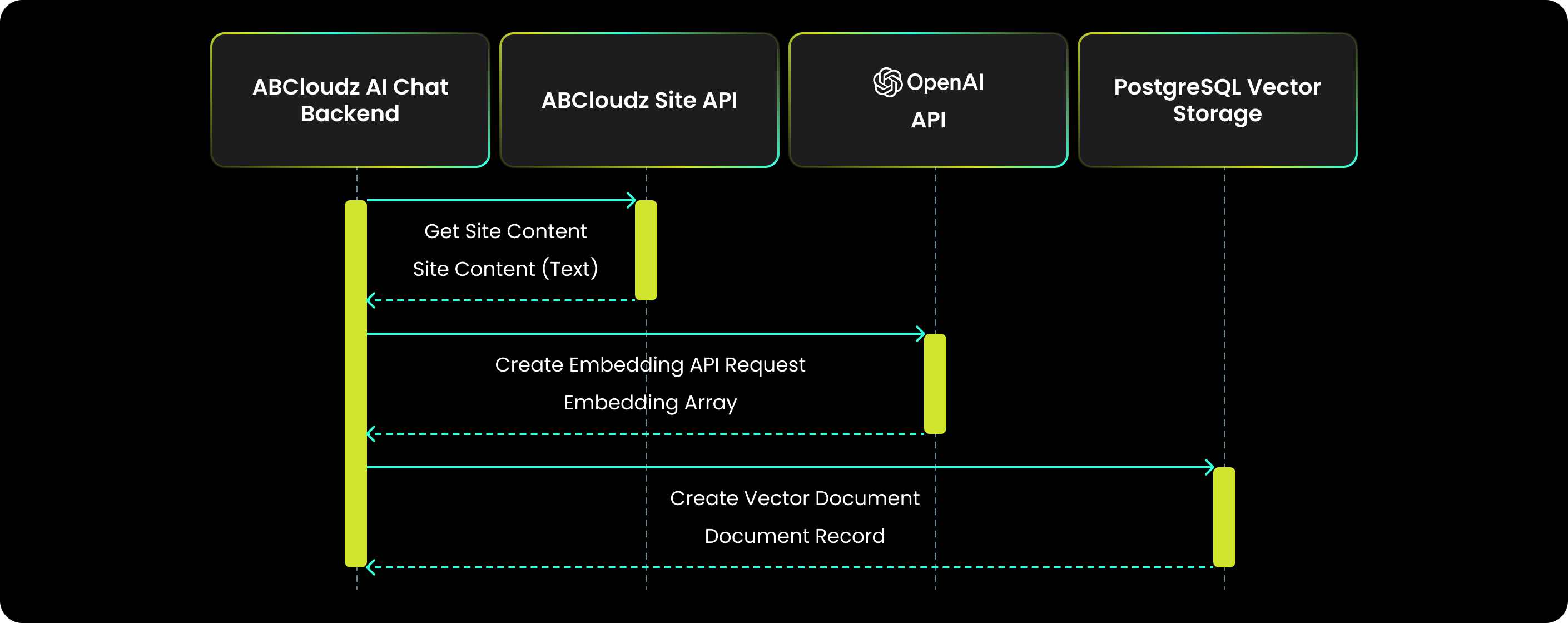

Every night at midnight, the AI Chatbot backend re-indexes the abcloudz.com content to create and update embeddings.

The indexing process is shown in the diagram above.

The AI Chatbot backend calls the ABCloudz API to retrieve the site content. After processing the content with the LangChain framework, it sends the normalized text to the OpenAI embedding model to create vector embeddings. The returned embeddings are stored in the database.

This process is repeated for each indexed text piece, e.g., a phrase, sentence, paragraph, or article.
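A nightly re-indexing pass can be sketched as “split each page into pieces, embed each piece, store the result.” This is an illustrative sketch under stated assumptions: the paragraph-packing splitter, the `embed` callable (a stand-in for the OpenAI embedding call), and the dictionary used as a store are all hypothetical. Skipping unchanged chunks by content hash is an optional optimization that is not necessarily what the ABCloudz backend does, and removing chunks of deleted pages is left out.

```python
import hashlib
from typing import Callable, Dict, List


def split_into_chunks(text: str, max_chars: int = 1000) -> List[str]:
    """Greedily pack whole paragraphs into chunks of at most max_chars characters."""
    chunks: List[str] = []
    current = ""
    for paragraph in (p.strip() for p in text.split("\n\n")):
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            # A single paragraph longer than max_chars is kept whole in this sketch.
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


def reindex(
    pages: Dict[str, str],
    embed: Callable[[str], List[float]],
    store: Dict[str, dict],
) -> int:
    """Embed new or changed chunks of every page; return how many chunks were embedded."""
    embedded = 0
    for page_id, text in pages.items():
        for i, chunk in enumerate(split_into_chunks(text)):
            key = f"{page_id}#{i}"
            digest = hashlib.sha256(chunk.encode("utf-8")).hexdigest()
            if store.get(key, {}).get("digest") == digest:
                continue  # unchanged since the last run: skip the embedding call
            store[key] = {"page": page_id, "text": chunk, "digest": digest, "embedding": embed(chunk)}
            embedded += 1
    return embedded
```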

Question and Answer Contexts

To improve the AI Chatbot’s answers to frequently asked questions by giving the system more context, we created a Q&A page backed by its own database tables.

Each Q&A record contains:

- Question

- Answer

- Link URL

- Link Title

The Q&A records are indexed and added to the database in the same way as any other website content.
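Since Q&A records are indexed like regular content, each record has to be flattened into a single text before embedding. The record fields below mirror the list above, while the flattening format is a hypothetical choice for illustration; keeping the link in the text lets the bot point users to the related page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QARecord:
    question: str
    answer: str
    link_url: str
    link_title: str

    def to_document_text(self) -> str:
        """Flatten the record into one text block to embed like any other page content."""
        return (
            f"Question: {self.question}\n"
            f"Answer: {self.answer}\n"
            f"More information: {self.link_title} ({self.link_url})"
        )
```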

Session Storage

Chat sessions are stored temporarily in Redis and persistently in the PostgreSQL database. The temporary Redis storage supplies the chat history used to generate standalone questions from the user’s queries.

Chat API Limits

To avoid API throttling, each session is limited to one chat query to the application API at a time. Any other request that arrives for the same session while a query is in progress is rejected with an HTTP 400 error.
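The one-query-per-session rule can be sketched as a guard that tracks sessions with a query in flight. This is a minimal single-process sketch with a hypothetical class name; it returns (status, body) tuples instead of real HTTP responses. With several back-end instances, the busy set would have to live in shared storage (for example, a Redis-based lock), which is an assumption beyond what the post describes.

```python
import asyncio
from typing import Awaitable, Callable, Set, Tuple


class OneQueryPerSession:
    """Reject a new chat query while another query for the same session is still running."""

    def __init__(self) -> None:
        self._busy: Set[str] = set()

    async def handle(
        self, session_id: str, run_query: Callable[[], Awaitable[str]]
    ) -> Tuple[int, str]:
        # Check-and-add runs without an await in between, so it is atomic on one event loop.
        if session_id in self._busy:
            return 400, "A query for this session is already in progress."
        self._busy.add(session_id)
        try:
            return 200, await run_query()
        finally:
            self._busy.discard(session_id)  # release the session even if the query fails
```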

Dancing with AI – Model Training and Fine-Tuning

When the basic development phase was over, the developers proceeded to the exciting but challenging stage of the project – the AI model training and fine-tuning.

The most extensive part of language model training consists of feeding the model large amounts of unstructured data, usually retrieved from the web, so that it can extract and learn text patterns and associations.

Since we used already-trained OpenAI models (text-embedding-ada-002, GPT-3.5, and GPT-4), our task was to fine-tune them for our use case. This phase required us to create and integrate:

- well-structured, classified, and searchable documents on the relevant topics used by a model to generate a response

- well-formed query templates (prompts) for building requests to the LLMs
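A query template of this kind might look like the sketch below. The wording is hypothetical, since the production prompts are not published in this post; the sketch shows the general pattern of restricting the model to retrieved context and numbering sources so the answer can reference them.

```python
from string import Template
from typing import Dict, List

# Hypothetical prompt wording, for illustration only.
QA_PROMPT = Template(
    "You are the ABCloudz website assistant.\n"
    "Answer the question using only the context below. "
    "If the answer is not in the context, say you don't know.\n\n"
    "Context:\n$context\n\n"
    "Question: $question\n"
    "Answer:"
)


def build_prompt(question: str, documents: List[Dict[str, str]]) -> str:
    """Number each retrieved document and keep its source URL so the answer can cite it."""
    context = "\n\n".join(
        f"[{i}] {doc['text']} (source: {doc['url']})"
        for i, doc in enumerate(documents, start=1)
    )
    return QA_PROMPT.substitute(context=context, question=question)
```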

The phase concluded with extensive testing of the chatbot’s operation by our QA and user teams.

AI-Powered Communication Tool

To see how the AI Chatbot performs in action and improve it further, we connected it to the abcloudz.com website back end. Thanks to this implementation, our website visitors now enjoy an enhanced means of communication, while we receive valuable marketing feedback.

The Retrieval Augmented Generation framework provides great flexibility for prompt engineering, which allows the AI Chatbot to take on different roles: support engineer, sales manager, fitness coach, legal adviser, etc. This makes the solution applicable in practically every industry, from Trade and Finance to the entire public sector, especially Education, Healthcare, and Public Administration. Depending on the intended implementation, the solution architecture can be extended beyond a website chatbot assistant to support other user communication channels, such as phone or messaging apps.
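Switching roles mostly comes down to swapping the system prompt while the retrieval step stays the same. The sketch below assembles a request in the system/user message format used by OpenAI chat models; the role names and descriptions are hypothetical examples, not the prompts used in the ABCloudz chatbot.

```python
from typing import Dict, List

# Hypothetical persona descriptions; only the system prompt changes between roles.
ROLES: Dict[str, str] = {
    "support_engineer": "You are a support engineer. Give precise, step-by-step technical answers.",
    "sales_manager": "You are a sales manager. Explain services and benefits clearly and suggest next steps.",
    "fitness_coach": "You are a fitness coach. Give practical, safe, encouraging advice.",
}


def build_messages(role: str, context: str, question: str) -> List[Dict[str, str]]:
    """Assemble a chat request: persona and retrieved context in the system message."""
    if role not in ROLES:
        raise ValueError(f"unknown role: {role!r}")
    system = f"{ROLES[role]}\nAnswer only from this context:\n{context}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]
```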

This out-of-the-box, scalable conversational AI solution is easy to embed into a web app and serves both groups of users: website visitors and bot operators. Visitors get convenient 24/7 service from an intelligent, responsive virtual assistant, while chatbot operators relieve pressure on their Customer Support staff, encourage user engagement, and receive valuable feedback and statistics for marketing purposes.